A Spring Cloud Compatible Service Mesh

Background

Today, service mesh technology is mostly used to solve service management and governance problems in microservice architectures. It typically includes the following subsystems:

Traffic Scheduling System

- Service registry and discovery system

- Traffic routing: A/B testing, blue-green deployment, and canary deployment (full-path traffic coloring and labeling)

- East-west Traffic Sidecar, either with traffic hijacking (such as Istio) or without it (such as Alibaba’s open-source MOSN)

- Service instance load balancing (round-robin, weighted round-robin, consistent hashing, random, etc.)
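To make the load-balancing strategies above concrete, here is a minimal Python sketch of weighted round-robin. It uses the "smooth" variant popularized by nginx, which interleaves picks instead of sending the heavy instance's turns in one burst. The `Instance` type, addresses, and weights are hypothetical, and this is not the balancer of any particular mesh.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """A service instance; the type and addresses below are hypothetical."""
    address: str
    weight: int
    current: int = 0  # running score used by smooth weighted round-robin


class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin, as popularized by nginx's upstream module.

    On every pick each instance's score grows by its weight; the highest score
    wins and is then reduced by the total weight, spreading out the turns of
    heavily weighted instances.
    """

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self.instances = instances
        self.total = sum(inst.weight for inst in instances)

    def pick(self) -> Instance:
        for inst in self.instances:
            inst.current += inst.weight
        best = max(self.instances, key=lambda inst: inst.current)  # first wins on ties
        best.current -= self.total
        return best


lb = SmoothWeightedRoundRobin([
    Instance("10.0.0.1:8080", weight=5),
    Instance("10.0.0.2:8080", weight=1),
    Instance("10.0.0.3:8080", weight=1),
])
picks = [lb.pick().address for _ in range(7)]
```

With weights 5:1:1, every seven picks hit the first instance five times, interleaved with one pick each of the other two, and all scores return to zero so the cycle repeats.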

Resilience & Fault-tolerant System

- Rate Limiting. Limit the rate of incoming requests.

- Circuit Breaker. When a system is seriously struggling, failing fast is better than making clients wait.

- Retry. Many faults are transient and may self-correct after a short delay.

- Timeout Control. Beyond a certain wait interval, a successful result is unlikely.

- Health Check. Determine whether a service is healthy.

- Cache. A proportion of requests may be identical, so their responses can be reused.
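Rate limiting is commonly implemented with a token bucket. The sketch below is one minimal version, assuming an injectable clock so the behavior is deterministic; the class and parameter names are illustrative, and other meshes and libraries may use different schemes.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: refills `rate` tokens per second up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller should reject (e.g. HTTP 429) or queue the request


# A fake clock makes the example deterministic.
fake_now = [0.0]
limiter = TokenBucket(rate=2, capacity=3, clock=lambda: fake_now[0])
burst = [limiter.allow() for _ in range(4)]         # 3 allowed, 4th rejected
fake_now[0] = 1.0                                   # one second later: 2 tokens refilled
after_refill = [limiter.allow() for _ in range(3)]  # 2 allowed, 3rd rejected
```

Starting full lets a short burst through, after which throughput is capped at `rate` requests per second: `capacity` controls the burst size and `rate` the sustained limit.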

Observation System

- Distributed Tracing. For example, Zipkin, Jaeger, and OpenTracing.

- Traffic Metrics. For example, service latency, error rate, total number of requests, and the distribution of response error codes.

- Logs. For example, access logs, error logs, and system logs.
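The traffic metrics listed above can be derived from per-request records. The following sketch aggregates request count, error rate, nearest-rank latency percentiles, and the error-code distribution. The `RequestRecord` type is hypothetical, and treating every 4xx/5xx status as an error is an assumption (some systems count only 5xx).

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One observed request; a hypothetical record type for illustration."""
    latency_ms: float
    status: int


def percentile(sorted_values, p: int):
    """Nearest-rank percentile for an integer p in 1..100 (integer math avoids float rounding)."""
    rank = max(1, -(-p * len(sorted_values) // 100))  # ceil(p * n / 100)
    return sorted_values[rank - 1]


def summarize(records):
    """Aggregate request count, error rate, latency percentiles and error codes."""
    if not records:
        return {"total_requests": 0, "error_rate": 0.0,
                "p50_ms": None, "p99_ms": None, "error_codes": Counter()}
    latencies = sorted(r.latency_ms for r in records)
    # Assumption: any 4xx/5xx status counts as an error.
    errors = [r.status for r in records if r.status >= 400]
    return {
        "total_requests": len(records),
        "error_rate": len(errors) / len(records),
        "p50_ms": percentile(latencies, 50),
        "p99_ms": percentile(latencies, 99),
        "error_codes": Counter(errors),
    }


records = [RequestRecord(float(ms), 200) for ms in range(1, 9)]
records += [RequestRecord(9.0, 404), RequestRecord(10.0, 503)]
stats = summarize(records)
```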

Security System

- Authentication. Verify the identity of services, e.g. with mTLS.

- Authorization. Determine whether a service has permission to access other services.

- Encryption. Encrypt inter-service communication.

Issues

Most service meshes on the market today are built on Kubernetes and provide a non-intrusive, cross-language solution. We believe these solutions have the following problems:

- Lack of complete in-service observability. At the service level, it is not enough to collect application metrics (such as throughput, latency, and error rate); we also need to understand how services access databases, caches, message queues, etc., together with the corresponding tracing logs. All of these metrics and logs must be correlated with each other.

- Canary deployment is too simplistic to be practical. A real canary deployment should target specific users identified by specific labels, and the canary traffic must be propagated through all services, not only north-south but also east-west.

- Migration problems for the Java community. Spring Cloud in particular has a different architecture from Kubernetes-based service meshes. Migrating means dropping Spring Cloud's solutions for service registry and discovery, configuration management, resilience and fault tolerance, and so on. However, Spring Cloud is more mature and more enterprise-ready than current service meshes.

- Complicated development environment. Unlike Spring Cloud, current service meshes are tightly coupled with Kubernetes, so developers need a Kubernetes environment to debug or test their code.

- Traffic hijacking based on `iptables` is inefficient. `iptables` consumes too many kernel resources, and it is very hard to maintain and troubleshoot.

Comparison

Both Spring Cloud and Service Mesh have their own advantages and disadvantages. If we could combine them and let users take advantage of both solutions, we would get the best of both worlds.

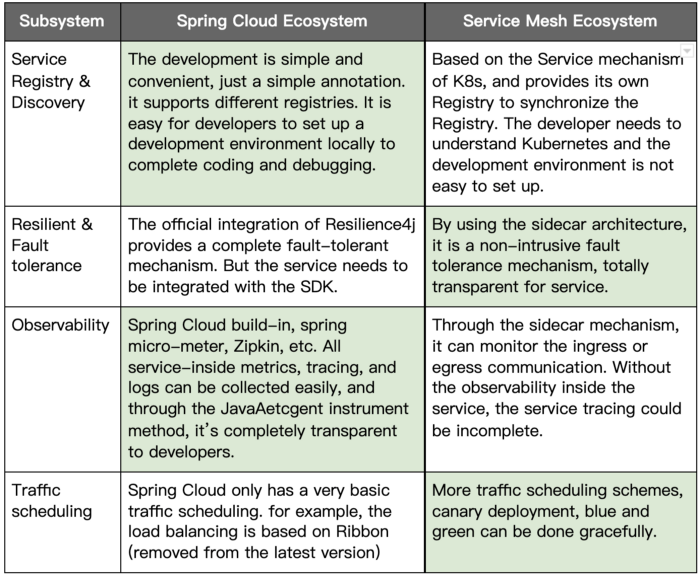

The following table compares Spring Cloud and Service Mesh.

The table above shows the pros and cons of each. However, engineering is about the tradeoffs we face every day, and this comparison may be unfair: Service Mesh has to provide a cross-language, cross-platform solution, whereas Spring Cloud is an application-level solution for Java only.

In other words, Service Mesh trades away some capabilities for versatility, while Spring Cloud can specialize deeply in its own area.

We think there could be a solution in which people do not have to make a black-and-white decision, especially between Spring Cloud and a Kubernetes-based Service Mesh.

In addition, Java and Spring are powerful and mature, and many enterprises use Java extensively in critical businesses such as finance, e-commerce, telecom, manufacturing, and retail. The Java community has the largest and most popular ecosystem, so we think a service mesh should be compatible with it.

So we decided to develop a new type of service mesh that is orchestrated by Kubernetes yet fully compatible with Spring Cloud. In other words, Spring Cloud applications can be migrated to the service mesh smoothly, at no cost.

EaseMesh

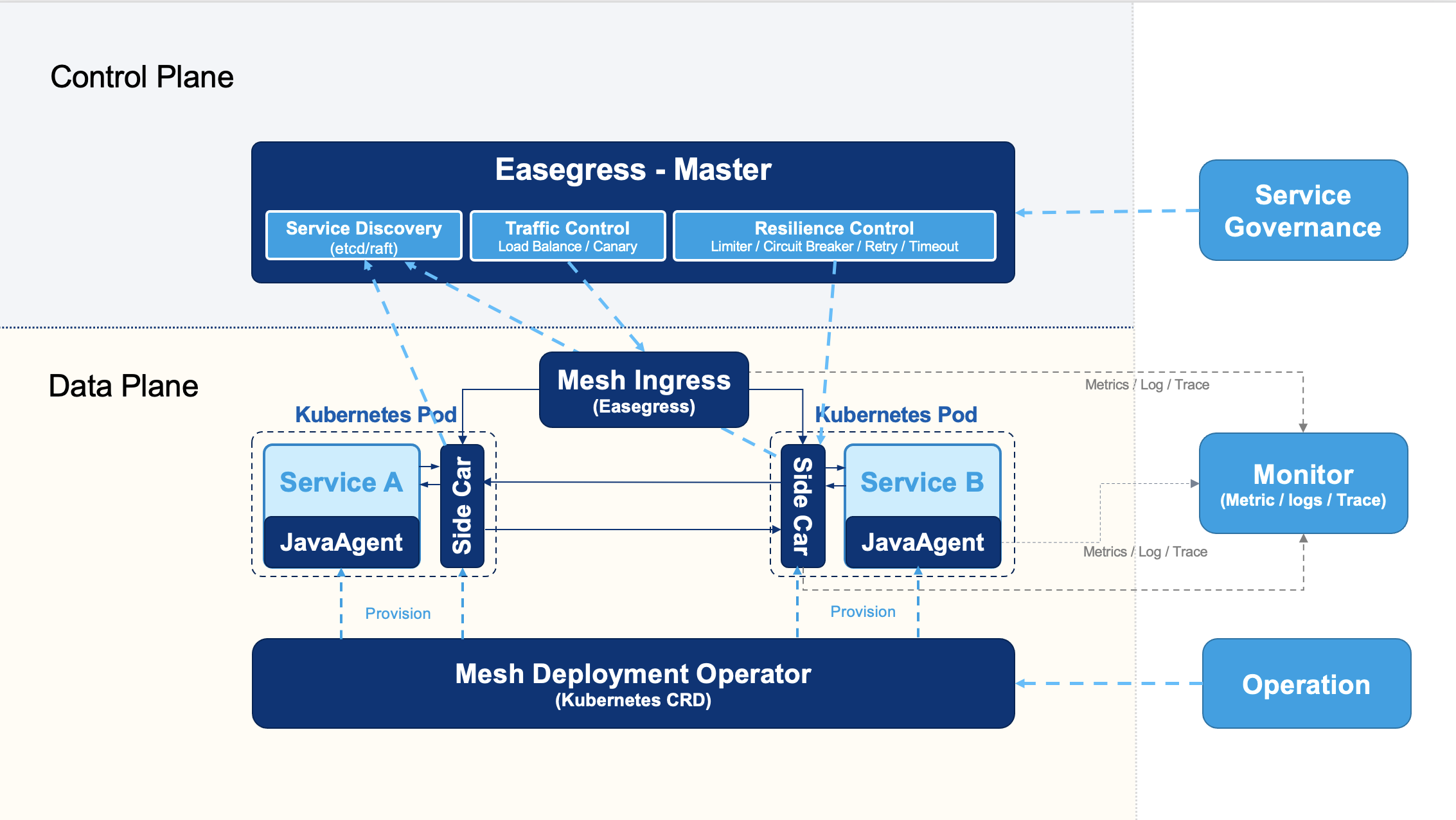

EaseMesh is a service mesh that is compatible with the Spring Cloud ecosystem. It uses Easegress (see The Next Generation Service Gateway) as the sidecar and traffic gateway, and EaseAgent as a Java agent for in-service monitoring.

Both the sidecar and the Java agent are non-intrusive. In other words, EaseMesh can migrate a Spring Cloud application to the service mesh without changing a single line of source code, and the whole architecture gains full-featured service governance, resilience design, and complete observability.

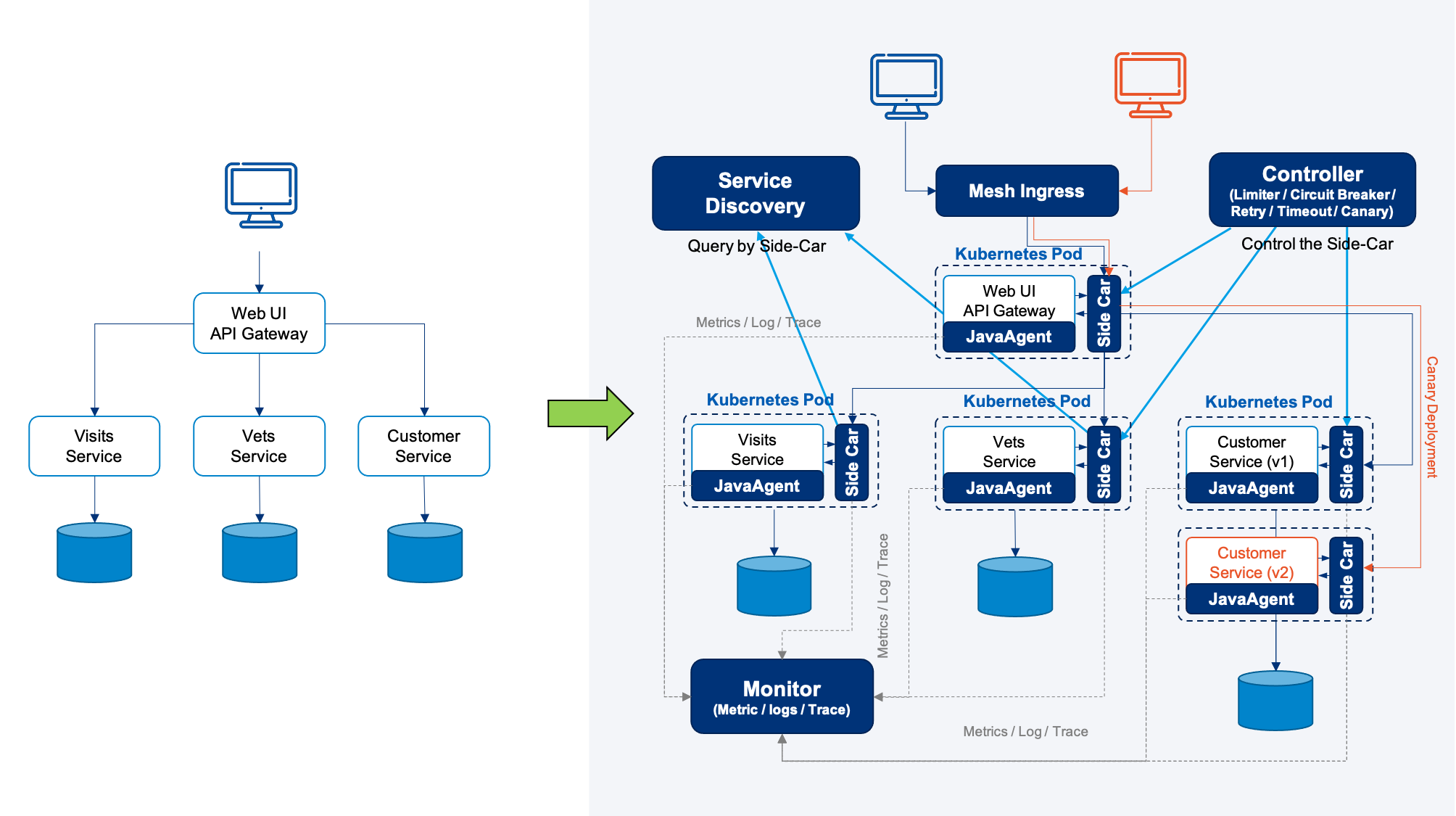

The following diagram shows an example.

On the left, the diagram shows the “Spring Cloud PetClinic” microservice example. It uses the Spring Cloud family, such as Spring Cloud Gateway, Spring Cloud Circuit Breaker, Spring Cloud Config, Spring Cloud Sleuth, Resilience4j, Micrometer, and Eureka service discovery from the Spring Cloud Netflix stack.

Using EaseMesh’s Kubernetes CRD (Custom Resource Definition) and just a few commands, the whole Spring Cloud application is migrated to the service mesh.

Service discovery, configuration management, traffic scheduling, the resilience and fault-tolerance sidecar, full-stack monitoring (metrics, logs, tracing), and full-stack canary deployment are all assembled (the right part of the diagram above shows the architecture).

Now, let’s walk through the details:

Automatically assemble the sidecar and Java agent. With the Kubernetes CRD, the sidecar and Java agent are installed into a Java application transparently.

EaseMesh uses Easegress for service governance. Easegress brings the following major features.

- Application-level Service Discovery. Fully compatible with Java service registry and discovery systems such as Eureka, Consul, and Nacos.

- Raft Consensus. Easegress has built-in Raft consensus: the master nodes act as the service registry and discovery, and the slave nodes act as sidecars.

- Resilience Design. Easegress ports [resilience4j](https://github.com/resilience4j/resilience4j) from Java to Go, bringing its fault-tolerance features such as circuit breaker, rate limiter, and retry.

- Traffic Orchestration. Easegress can color both north-south and east-west traffic and keep the coloring in sync across the whole cluster, which enables cluster-wide canary deployment.
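The resilience4j circuit breaker is, at its core, a state machine with CLOSED, OPEN, and HALF_OPEN states driven by the failure rate over a sliding window. A simplified Python sketch of that idea follows. It is not Easegress's Go port, and the parameter names only loosely mirror resilience4j settings such as the failure-rate threshold and the wait duration in the open state.

```python
import time
from collections import deque
from enum import Enum


class State(Enum):
    CLOSED = "closed"
    OPEN = "open"
    HALF_OPEN = "half_open"


class CircuitBreaker:
    """Simplified count-based circuit breaker (illustrative sketch).

    CLOSED:    calls pass; the last `window` outcomes are recorded, and a failure
               rate >= `failure_rate_threshold` percent over a full window opens it.
    OPEN:      calls are rejected until `wait_seconds` have elapsed.
    HALF_OPEN: `probe_calls` trial outcomes decide between CLOSED and OPEN.
    """

    def __init__(self, window=10, failure_rate_threshold=50.0, wait_seconds=30.0,
                 probe_calls=3, clock=time.monotonic):
        self.window = window
        self.threshold = failure_rate_threshold
        self.wait_seconds = wait_seconds
        self.probe_calls = probe_calls
        self.clock = clock
        self.opened_at = 0.0
        self._transition(State.CLOSED)

    def _transition(self, state):
        self.state = state
        size = self.probe_calls if state is State.HALF_OPEN else self.window
        self.outcomes = deque(maxlen=size)  # True means the call failed
        if state is State.OPEN:
            self.opened_at = self.clock()

    def allow_request(self) -> bool:
        if self.state is State.OPEN:
            if self.clock() - self.opened_at < self.wait_seconds:
                return False  # fail fast instead of making the client wait
            self._transition(State.HALF_OPEN)
        return True  # note: this sketch does not cap concurrent half-open probes

    def record(self, failed: bool) -> None:
        self.outcomes.append(failed)
        if len(self.outcomes) < self.outcomes.maxlen:
            return  # not enough data yet to judge the failure rate
        failure_rate = 100.0 * sum(self.outcomes) / len(self.outcomes)
        if failure_rate >= self.threshold:
            self._transition(State.OPEN)
        elif self.state is State.HALF_OPEN:
            self._transition(State.CLOSED)


now = [0.0]  # fake clock so the example is deterministic
cb = CircuitBreaker(window=4, failure_rate_threshold=50.0, wait_seconds=10.0,
                    probe_calls=2, clock=lambda: now[0])
for failed in (False, True, False, True):  # 50% failures over a full window
    if cb.allow_request():
        cb.record(failed)
state_after_failures = cb.state             # State.OPEN
rejected_while_open = not cb.allow_request()
now[0] = 10.0                               # wait duration has elapsed
probes = []
for _ in range(2):                          # two successful trial calls
    allowed = cb.allow_request()
    probes.append(allowed)
    if allowed:
        cb.record(False)
```

Failing fast while OPEN is what protects a struggling service: callers get an immediate error instead of waiting on timeouts, and the HALF_OPEN probes decide whether the service has recovered.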

Elegant Traffic Hijacking. Unlike other service meshes that use `iptables` to hijack traffic, EaseMesh simply redirects the service discovery endpoint to the sidecar and returns the sidecar's IP address (127.0.0.1) as the address of the remote service. The sidecar then takes responsibility for the real service discovery and remote communication. This approach adds no network complexity.
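The discovery-based hijacking described above can be sketched in a few lines of Python: the application's discovery endpoint always answers with the local sidecar, and the sidecar performs the real lookup and load balancing. The class names, the `vets-service` entry, the IP addresses, and port 13001 are all hypothetical, and how the sidecar identifies the target service (for example from the Host header) is an assumption that is elided here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    host: str
    port: int


class SidecarDiscoveryEndpoint:
    """The discovery endpoint the application is pointed at (e.g. its Eureka URL).

    Whatever service the application asks for, it gets the local sidecar back,
    so outbound calls go to 127.0.0.1 without any iptables rules.
    """

    def __init__(self, sidecar_port: int):
        self.sidecar = Endpoint("127.0.0.1", sidecar_port)

    def lookup(self, service_name: str):
        return [self.sidecar]


class Sidecar:
    """Receives the redirected call and does the real discovery and load balancing.

    Assumption: the sidecar learns the target service name from the request
    itself (for example from the Host header); that detail is elided.
    """

    def __init__(self, registry):
        self.registry = registry  # service name -> list of real Endpoints
        self.next_index = {}

    def route(self, service_name: str) -> Endpoint:
        instances = self.registry[service_name]
        i = self.next_index.get(service_name, 0)
        self.next_index[service_name] = i + 1
        return instances[i % len(instances)]  # plain round-robin


# Hypothetical registry contents and sidecar port, for illustration only.
registry = {"vets-service": [Endpoint("10.1.0.5", 8080), Endpoint("10.1.0.6", 8080)]}
app_view = SidecarDiscoveryEndpoint(sidecar_port=13001)
sidecar = Sidecar(registry)

target = app_view.lookup("vets-service")[0]               # what the application dials
real = [sidecar.route("vets-service") for _ in range(3)]  # where the sidecar forwards
```

The application keeps its normal service-discovery client; only the answer it receives changes, which is why no kernel-level traffic interception is needed.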

The following diagram shows the architecture.

For more details, see the GitHub repo at https://github.com/megaease/easemesh, which is released under the Apache 2.0 license.

By combining the advantages of Spring Cloud and Kubernetes, EaseMesh lets you manage, orchestrate, and monitor Java applications on a mature, enterprise-level architecture at zero cost.

Beyond this, we can build many advanced enterprise solutions.

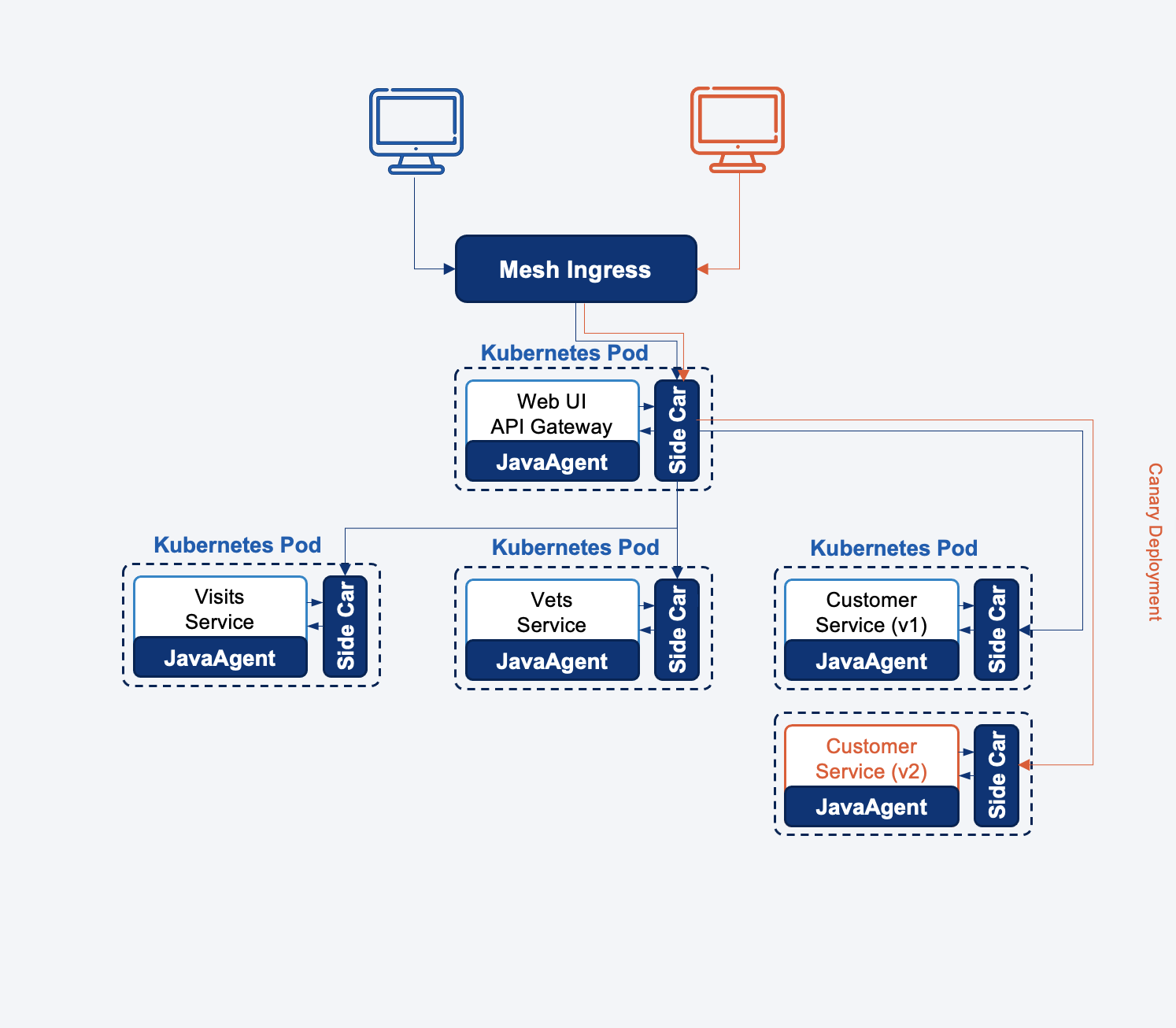

Canary Deployment

The first is canary deployment, a technique that limits a new version's features to certain users in order to reduce risk. A good canary release needs the following features:

- User Labels: a mechanism for defining user labels, used to recognize whether a user belongs to the canary group. Good labels must be based on user-side data, such as User-Agent, Cookie, user token, location, etc.

- Service Version Discovery: we need to manage service versions and know which version serves canary users.

- Traffic Scheduling: a gateway checks the user and routes the request to the right service instances.
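The three features above can be combined roughly as follows: the gateway labels the user from user-side data and colors the request with a version header, and each sidecar routes colored requests to matching instances, falling back to the stable version when a service has no canary build. The header names, rule format, and instance data are hypothetical and do not reflect EaseMesh's actual configuration.

```python
from dataclasses import dataclass

CANARY_HEADER = "X-Canary-Version"  # hypothetical coloring header


@dataclass
class CanaryRule:
    """Hypothetical rule: a request whose headers match every entry goes to `version`."""
    version: str
    match: dict


def color(headers, rules, default="stable"):
    """Gateway: label the user from user-side data and stamp the version on the request.

    An already-colored request (an east-west call made while serving a canary
    user) keeps its color, so the whole call chain stays on one version.
    Assumption: header names are already normalized (real HTTP headers are
    case-insensitive).
    """
    colored = dict(headers)
    if CANARY_HEADER not in colored:
        version = default
        for rule in rules:
            if all(headers.get(k) == v for k, v in rule.match.items()):
                version = rule.version
                break
        colored[CANARY_HEADER] = version
    return colored


def pick_instances(instances, headers, default="stable"):
    """Sidecar: route to instances of the colored version, else fall back to stable."""
    version = headers.get(CANARY_HEADER, default)
    matching = [i for i in instances if i["version"] == version]
    return matching or [i for i in instances if i["version"] == default]


rules = [CanaryRule(version="v2", match={"X-User-Group": "beta"})]
frontend = [{"addr": "10.0.0.1", "version": "stable"}, {"addr": "10.0.0.2", "version": "v2"}]
backend = [{"addr": "10.0.1.1", "version": "stable"}]  # no canary build of this service yet

beta_req = color({"X-User-Group": "beta"}, rules)
regular_req = color({"X-User-Group": "regular"}, rules)
```

Because an already-colored request keeps its header, east-west calls made while serving a beta user stay on the canary path end to end, which is the full-path coloring described earlier.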

With EaseMesh, this is easy to accomplish without any code changes. See the demonstration document for how to do it: https://github.com/megaease/easemesh#72-canary-deployment

Performance Test on Production

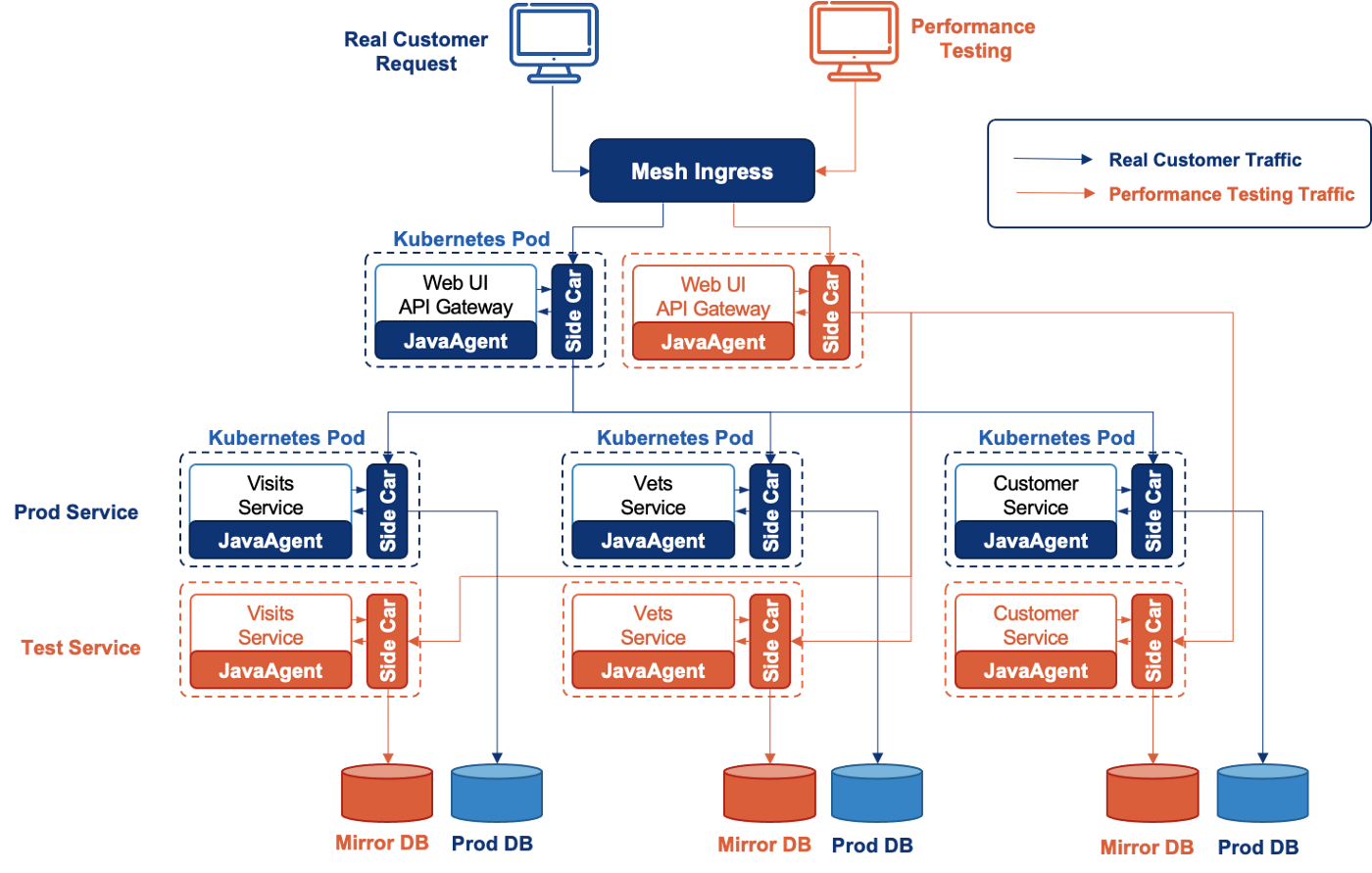

The second is running performance tests in production.

In a microservice architecture, it is costly to set up a full production-like environment to performance-test the whole site. Being able to run performance tests in production would therefore be very valuable.

However, running performance tests in production is very challenging, and we need to solve the following problems:

- No impact on real customers. A performance test can consume lots of resources and significantly affect our customers.

- Test data must be easy to clean up. A performance test can generate tons of data, which must be removed afterwards.

With EaseMesh, we can do the following:

- Deploy a shadow service for every service.

- Mirror all the middleware, such as databases, queues, and caches.

- Use the Java agent or the sidecar to redirect traffic to the mirrored middleware.

- Schedule the performance test traffic to the shadow services.

The following diagram shows how EaseMesh accomplishes this.

EaseMesh helps with the following steps:

- First, we clone all the middleware (DB, queue, cache, etc.) that the services depend on; we call these “Shadow Middleware”.

- Second, EaseMesh deploys a test copy of every service; we call these “Shadow Services”.

- Third, EaseMesh redirects the Shadow Services’ middleware access to the Shadow Middleware.

- Next, EaseMesh schedules the test traffic to the Shadow Services and Shadow Middleware.

- Finally, we can safely and easily remove all the Shadow Services and Shadow Middleware.
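The redirection steps above can be sketched as follows, assuming test requests carry a marker header that the Java agent or sidecar inspects. The header name, middleware names, and connection strings are hypothetical; EaseMesh's real mechanism may differ.

```python
SHADOW_HEADER = "X-Shadow-Traffic"  # hypothetical marker set on performance-test requests

# Hypothetical production vs. cloned "Shadow Middleware" addresses.
MIDDLEWARE = {
    "orders-db": {"prod": "mysql://db-prod:3306/orders",
                  "shadow": "mysql://db-shadow:3306/orders"},
    "order-events": {"prod": "kafka://mq-prod:9092",
                     "shadow": "kafka://mq-shadow:9092"},
}


def is_test_traffic(headers) -> bool:
    return headers.get(SHADOW_HEADER) == "true"


def resolve_middleware(name, headers) -> str:
    """Java agent / sidecar step: swap the middleware address for test traffic."""
    return MIDDLEWARE[name]["shadow" if is_test_traffic(headers) else "prod"]


def route_service(instances, headers):
    """Send test traffic only to Shadow Services and real traffic only to normal ones."""
    want_shadow = is_test_traffic(headers)
    chosen = [i for i in instances if i["shadow"] == want_shadow]
    if not chosen:
        # Fail closed: never fall back to production for test traffic.
        raise LookupError("no instance available for this traffic class")
    return chosen


instances = [{"addr": "10.0.0.1", "shadow": False}, {"addr": "10.0.9.1", "shadow": True}]
test_req = {SHADOW_HEADER: "true"}
```

The sketch fails closed: if no shadow instance exists, test traffic is rejected rather than falling back to production, which keeps the performance test away from real customers and real data.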

Without any source code changes, EaseMesh provides a convenient, easy, and safe solution for performance testing in production.

There is much more of this kind that we can do with EaseMesh. We look forward to your suggestions and concerns about this new service mesh, and to your help in making it great. Please feel free to file an issue or contribute code on GitHub: https://github.com/megaease/easemesh